
AI UX patterns for design systems (part 2)

In this part, we’ll go through the following patterns:

Visual Workflow Builders (Canvas)

Voice Interfaces

Prompt-to-Output / Generation Interfaces

Iterative Prompting/Feedback

Context-Aware UI

Assistants

Multi-Agent Workflows

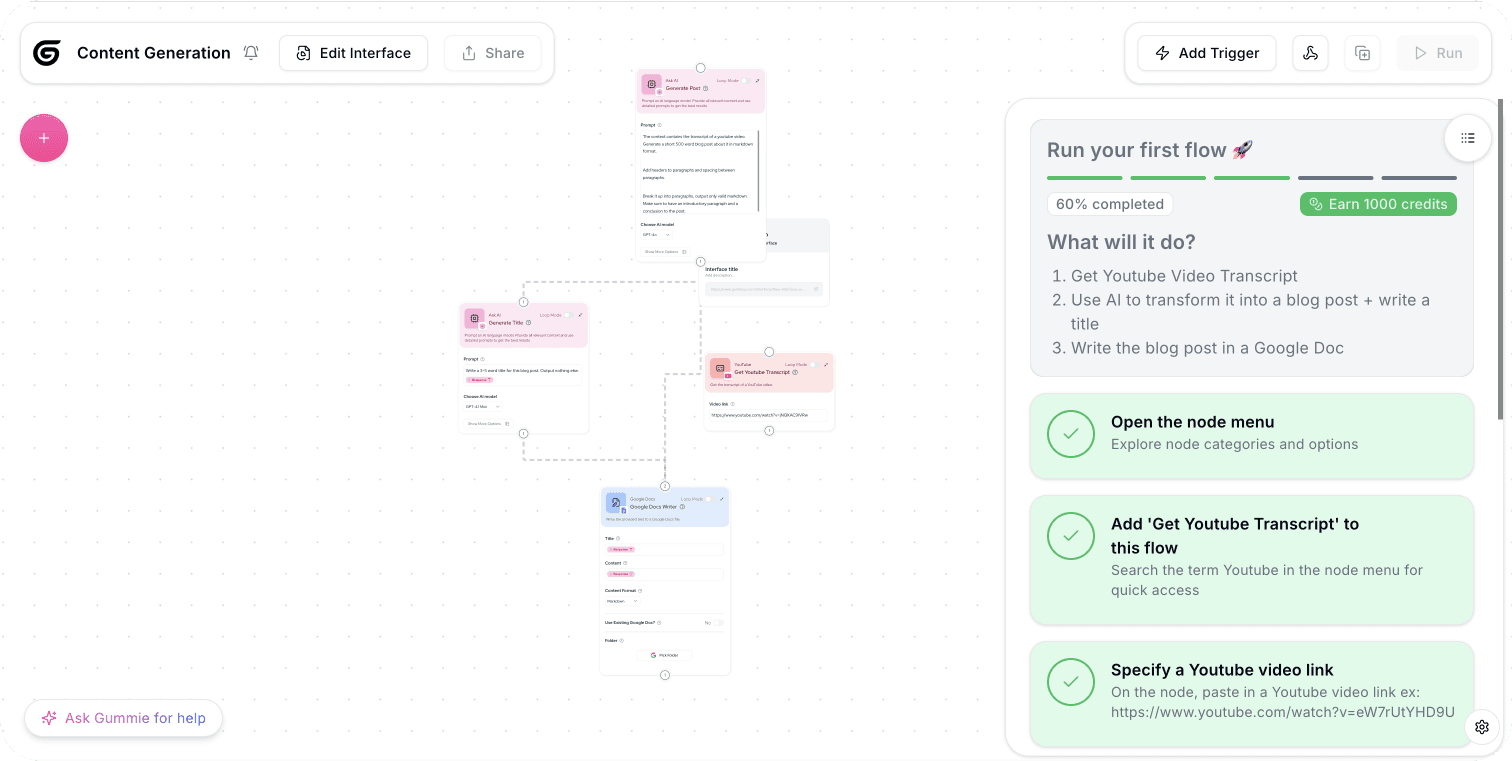

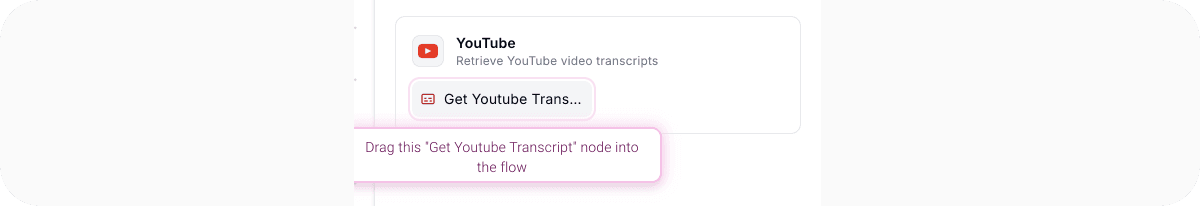

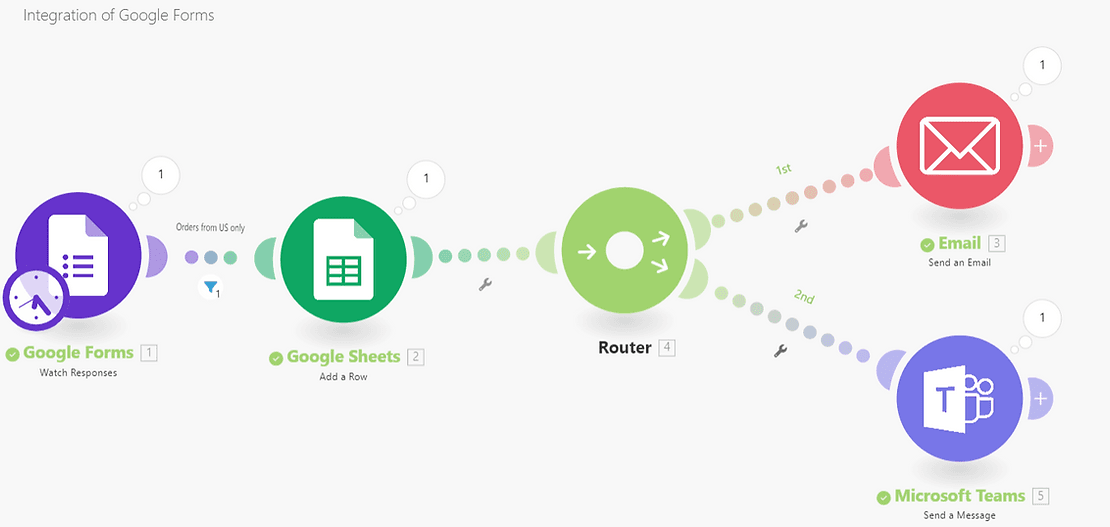

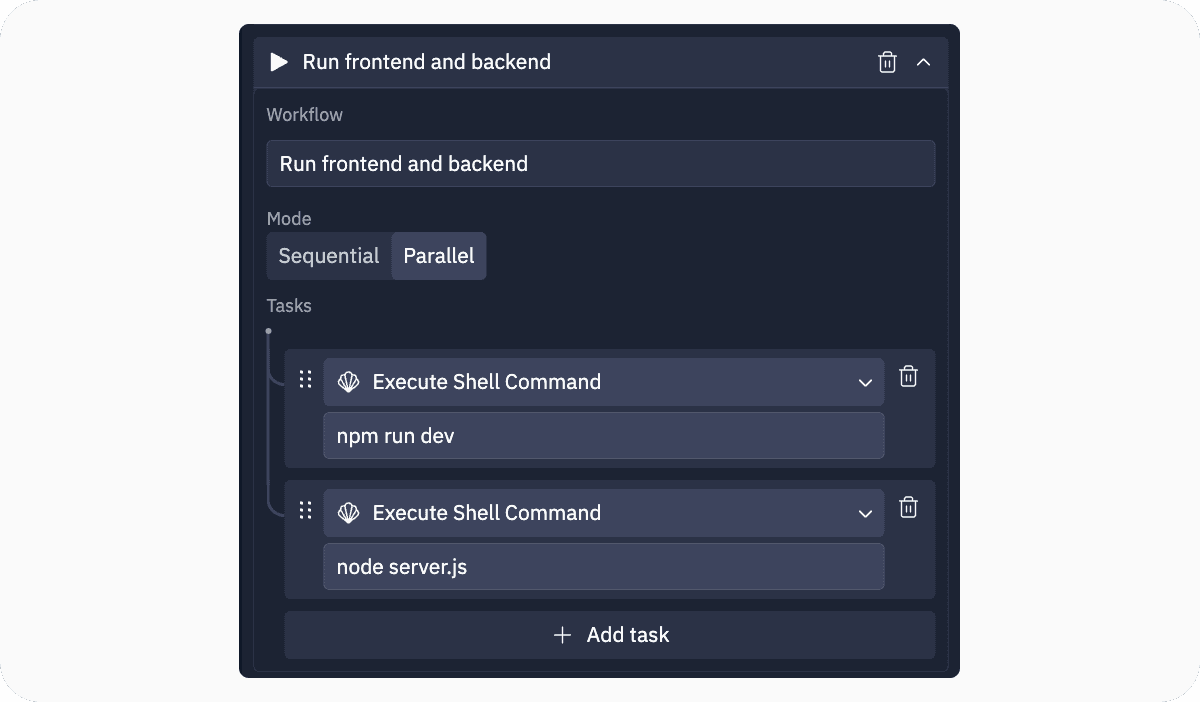

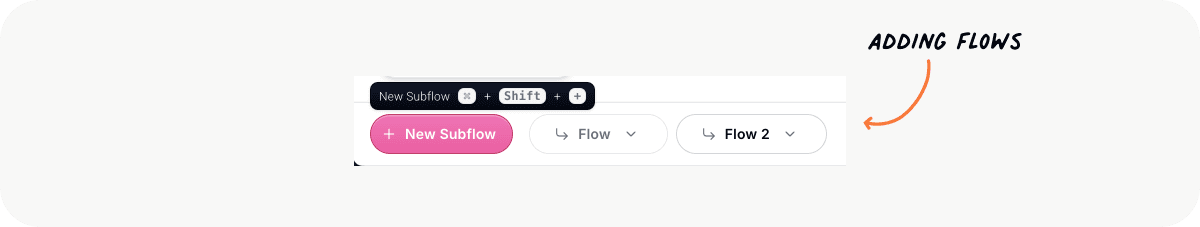

Canvas builder

Interfaces where users can connect and automate AI agents visually through drag-and-drop tools.

Examples:

Connecting a sentiment analysis model to trigger an email draft generator

Creating a voice transcription → summarization → action item extraction pipeline (Check Gumloop)

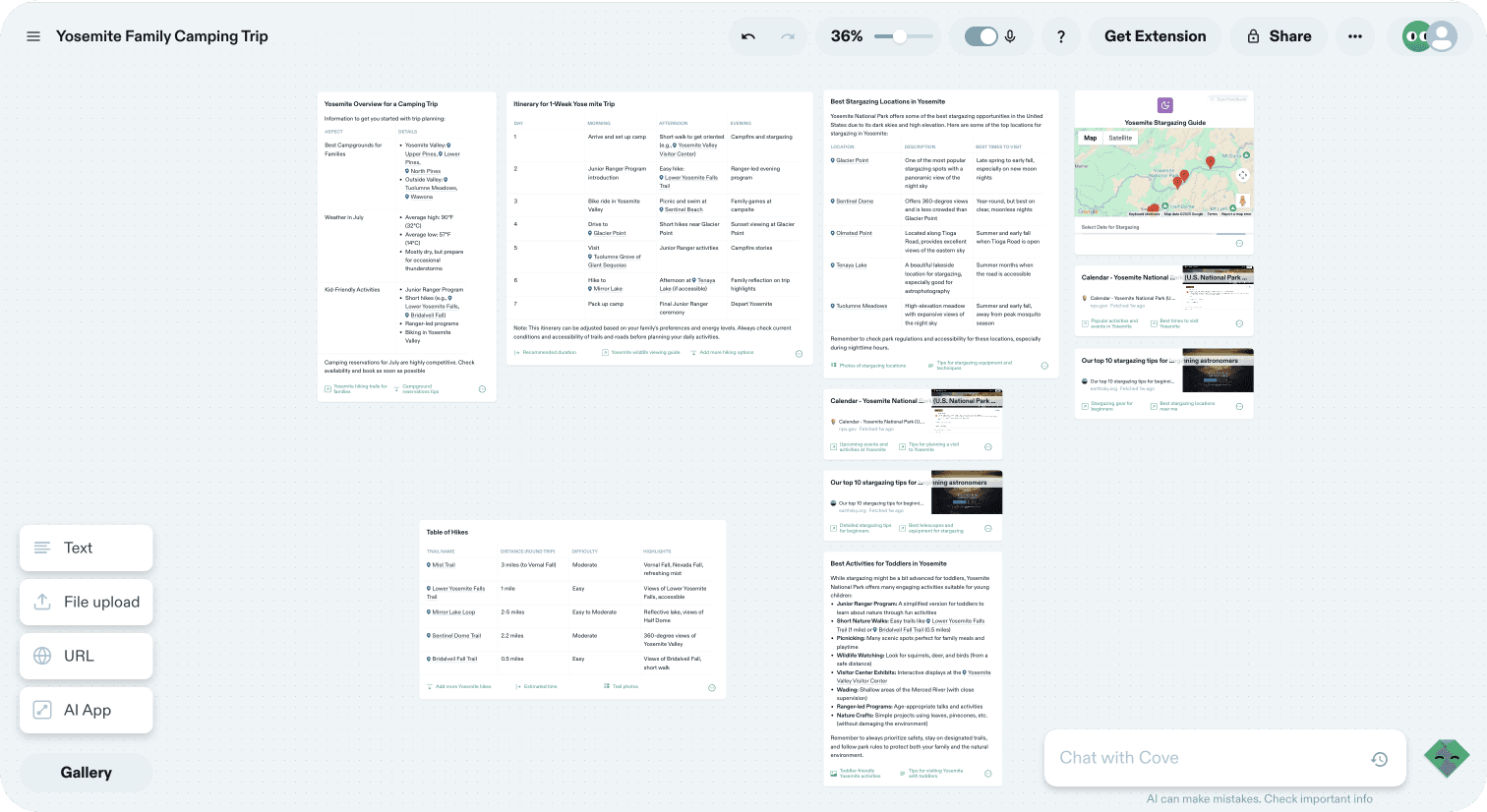

Connecting agents for creating a Yosemite family trip (Check Cove)

Tools: Make.com, Zapier AI actions, LangFlow, Cove, Gumloop

Interaction Patterns:

Drag-and-drop interfaces for connecting AI agents

Visual representation of data flow and logic

Real-time previews of AI operations

Version control for workflows

Node-specific debugging and inspection tools

Templates for common workflow patterns

Permission controls for shared workflows (set per agent)

Smart connectors that handle data transformation between agents
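To make the "visual representation of data flow" and "smart connectors" ideas more concrete, here is a minimal sketch of the data a canvas could keep behind the scenes. It uses the transcription → summarization → action item pipeline from the examples above. All type and function names (`WorkflowNode`, `canConnect`, `executionOrder`) are hypothetical and not taken from any of the tools listed.

```typescript
// Hypothetical data model for a canvas workflow; every name here is illustrative.
type DataType = "audio" | "text" | "summary" | "actionItems";

interface WorkflowNode {
  id: string;
  label: string;
  accepts: DataType; // the type this node expects as input
  emits: DataType; // the type this node produces
}

interface WorkflowEdge {
  from: string;
  to: string;
}

// A connection is valid only if the source's output matches the target's input.
function canConnect(source: WorkflowNode, target: WorkflowNode): boolean {
  return source.emits === target.accepts;
}

// Kahn's algorithm: returns node ids in execution order,
// or throws if the canvas contains a cycle.
function executionOrder(nodes: WorkflowNode[], edges: WorkflowEdge[]): string[] {
  const incoming = new Map<string, number>();
  for (const n of nodes) incoming.set(n.id, 0);
  for (const e of edges) incoming.set(e.to, (incoming.get(e.to) ?? 0) + 1);

  const ready = nodes.filter((n) => incoming.get(n.id) === 0).map((n) => n.id);
  const order: string[] = [];
  while (ready.length > 0) {
    const id = ready.shift() as string;
    order.push(id);
    for (const e of edges) {
      if (e.from !== id) continue;
      const remaining = (incoming.get(e.to) ?? 0) - 1;
      incoming.set(e.to, remaining);
      if (remaining === 0) ready.push(e.to);
    }
  }
  if (order.length !== nodes.length) {
    throw new Error("Workflow contains a cycle");
  }
  return order;
}

const transcribe: WorkflowNode = { id: "transcribe", label: "Voice transcription", accepts: "audio", emits: "text" };
const summarize: WorkflowNode = { id: "summarize", label: "Summarization", accepts: "text", emits: "summary" };
const extract: WorkflowNode = { id: "extract", label: "Action item extraction", accepts: "summary", emits: "actionItems" };
```

A canvas could call `canConnect` while the user drags a connector (to highlight valid drop targets) and `executionOrder` before a run, so a loop is reported on the canvas rather than failing mid-run.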

😅 Challenges:

Visualizing processes

Balancing simplicity with advanced control (different skill levels)

Providing appropriate feedback (for single agent/node)

Ensuring consistent performance across nodes and agents

Managing compute/resource allocation

Supporting collaboration and editing (imagine multi-user editing in Figma)

Gumloop

Cove

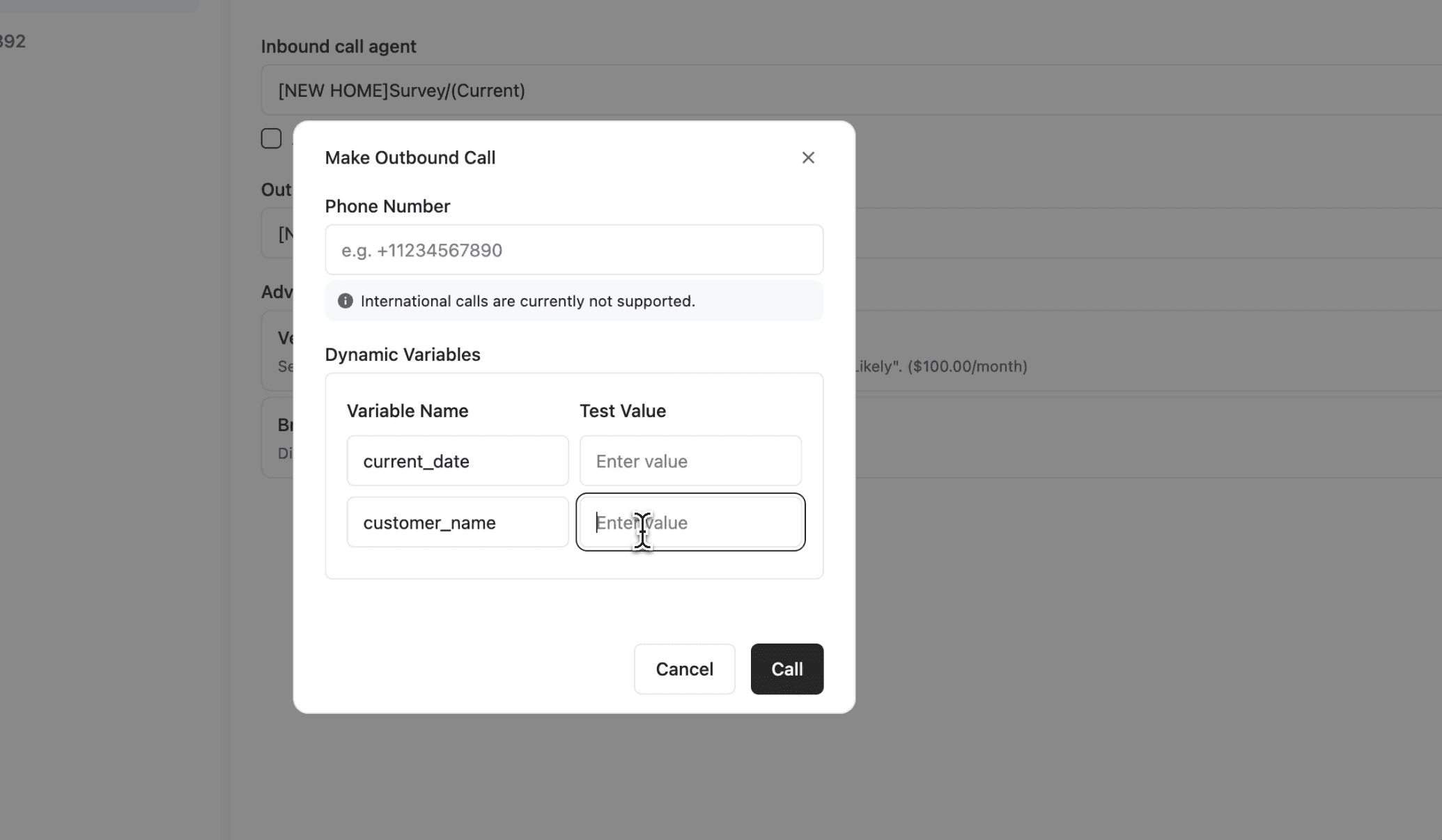

Vapi

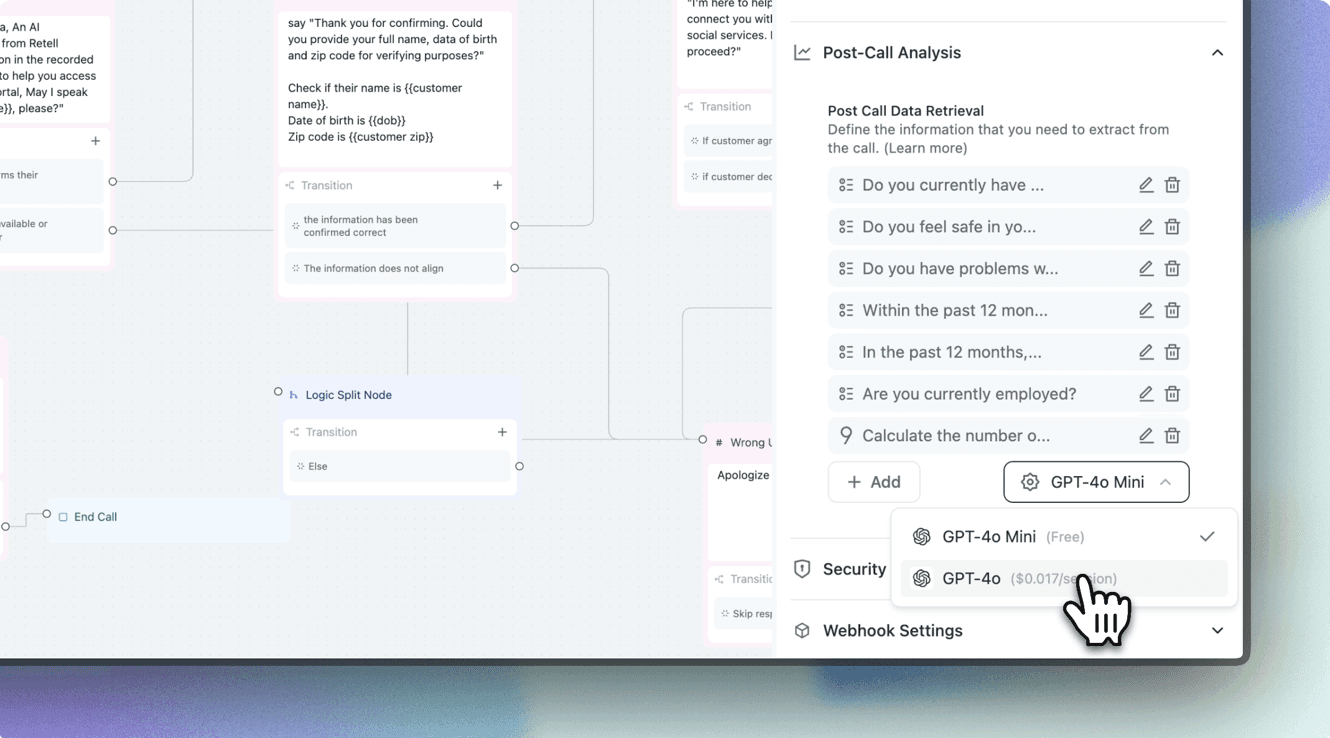

Voice Interfaces

Interfaces where users primarily interact via speech.

Examples:

Hands-free workflows for warehouse or field workers

AI phone agents making calls to reschedule or collect data (Check Retell AI)

Interaction Patterns:

Wake words and clear listening indicators

Training conversation flows (and defining steps)

Interruption handling and recovery

Confirmation steps (is voice alone enough?)

Fallbacks for noisy environments or failed recognition

Visual cues for voice + UI interactions

Transcripts for visibility
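One way to reason about listening indicators, interruption handling, and fallbacks together is a small state machine. The sketch below is only an illustration: the state names, event names, and indicator copy are assumptions made for this example, not the behavior of any tool mentioned here.

```typescript
// Hypothetical states and events for a voice session.
type VoiceState = "idle" | "listening" | "processing" | "speaking" | "fallback";
type VoiceEvent =
  | "wakeWord"
  | "utteranceEnd"
  | "responseReady"
  | "userInterrupts"
  | "recognitionFailed"
  | "responseFinished"
  | "fallbackResolved";

// Allowed transitions; any event not listed for a state is ignored.
const transitions: Record<VoiceState, Partial<Record<VoiceEvent, VoiceState>>> = {
  idle: { wakeWord: "listening" },
  listening: { utteranceEnd: "processing", recognitionFailed: "fallback" },
  processing: { responseReady: "speaking", recognitionFailed: "fallback" },
  speaking: { userInterrupts: "listening", responseFinished: "idle" },
  fallback: { fallbackResolved: "listening" },
};

function nextState(state: VoiceState, event: VoiceEvent): VoiceState {
  return transitions[state][event] ?? state;
}

// What a companion screen (if there is one) could show in each state.
const indicator: Record<VoiceState, string> = {
  idle: "Say the wake word to start",
  listening: "Listening…",
  processing: "Thinking…",
  speaking: "Speaking (you can interrupt)",
  fallback: "Didn't catch that. Try again or type instead",
};
```

Writing the transitions down like this makes gaps visible early, for example that interrupting while the assistant is speaking should return to listening rather than restart the whole flow.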

Tools: Google Assistant, Retell AI, Descript (Overdub), Otter.ai, Vapi

😅 Challenges:

No visual feedback about system status or understanding

Indicating that the AI is actively listening

A muted device causing silent failures

Latency can be frustrating (conversation can feel robotic)

Maintaining context across fragmented or broken inputs

Accessibility for users with speech impairments

Making the system’s understanding visible (supporting multi-modality)

Retell AI

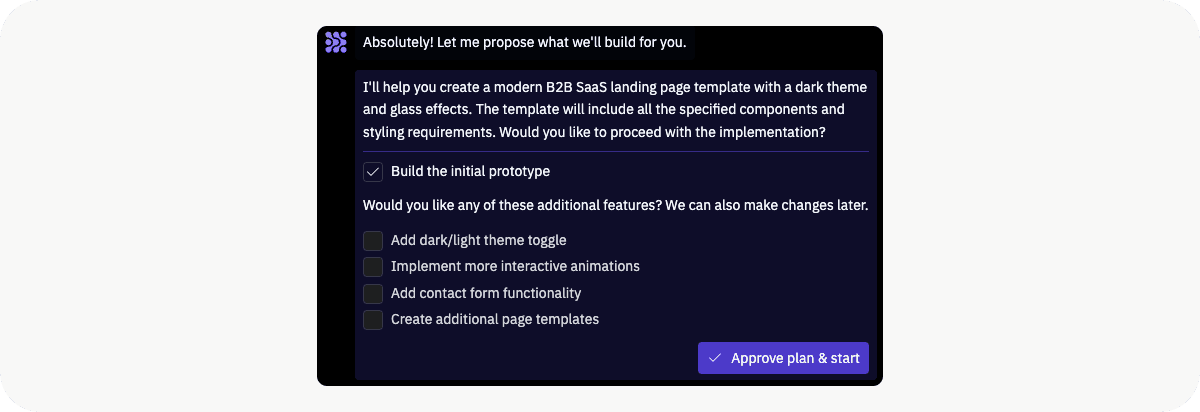

Prompt-to-Output Interfaces

Systems that generate complex content (e.g., images, videos, designs, or code) from simple prompts.

Examples:

Writing “Landing page for a fintech app” and getting a full layout

Generating a blog post from a topic prompt

Asking, “Make a 1-minute highlight reel from this 20-minute video”

Tools: Figma AI, Framer AI, Canva Magic, Notion AI, Claude, Lovable


Interaction Patterns:

Progressive refinement controls (e.g., sliders, edit prompts)

Previews before high-fidelity generation (especially for videos → generating low-fidelity for version 1)

Cost and time estimates before processing

Libraries of templates

History tracking of successful generations

Output versioning and branching

Export in multiple formats

Loading animations during long generations or clear feedback on how much time the process will take (with notification via email when the job is done)
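The "cost and time estimates before processing" pattern is mostly simple arithmetic surfaced at the right moment. Here is a minimal sketch for a video generation request. The rates in `RATES` are made up purely to keep the numbers easy to follow, and all names are hypothetical.

```typescript
type Fidelity = "draft" | "final";

interface GenerationRequest {
  outputSeconds: number; // length of the requested video
  fidelity: Fidelity;
}

interface Estimate {
  credits: number;
  waitSeconds: number;
  affordable: boolean;
}

// Hypothetical rates, chosen only to make the arithmetic easy to follow.
const RATES: Record<Fidelity, { creditsPerSecond: number; renderSecondsPerSecond: number }> = {
  draft: { creditsPerSecond: 1, renderSecondsPerSecond: 2 },
  final: { creditsPerSecond: 5, renderSecondsPerSecond: 10 },
};

// Computes cost and wait time up front, before any job is started.
function estimate(request: GenerationRequest, creditBalance: number): Estimate {
  const rate = RATES[request.fidelity];
  const credits = request.outputSeconds * rate.creditsPerSecond;
  const waitSeconds = request.outputSeconds * rate.renderSecondsPerSecond;
  return { credits, waitSeconds, affordable: credits <= creditBalance };
}

// Turns the estimate into the copy shown next to the "Generate" button.
function describe(e: Estimate): string {
  const minutes = Math.ceil(e.waitSeconds / 60);
  const suffix = e.affordable ? "" : " (not enough credits)";
  return `About ${e.credits} credits, roughly ${minutes} min${suffix}`;
}
```

With these assumed rates, a 60-second draft costs 60 credits and takes about 2 minutes, while the final render costs 300 credits and takes about 10 minutes. Showing both side by side is one way to nudge users toward a low-fidelity first version.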

😅 Challenges:

Long processing times require clear progress feedback

Resource-heavy outputs may consume user credits or quotas

Initial outputs often need substantial refinement

Managing expectations about output fidelity

Giving users meaningful control without overloading them

Allowing for low-fidelity drafts during iteration

Handling harmful, low-quality, or biased outputs safely

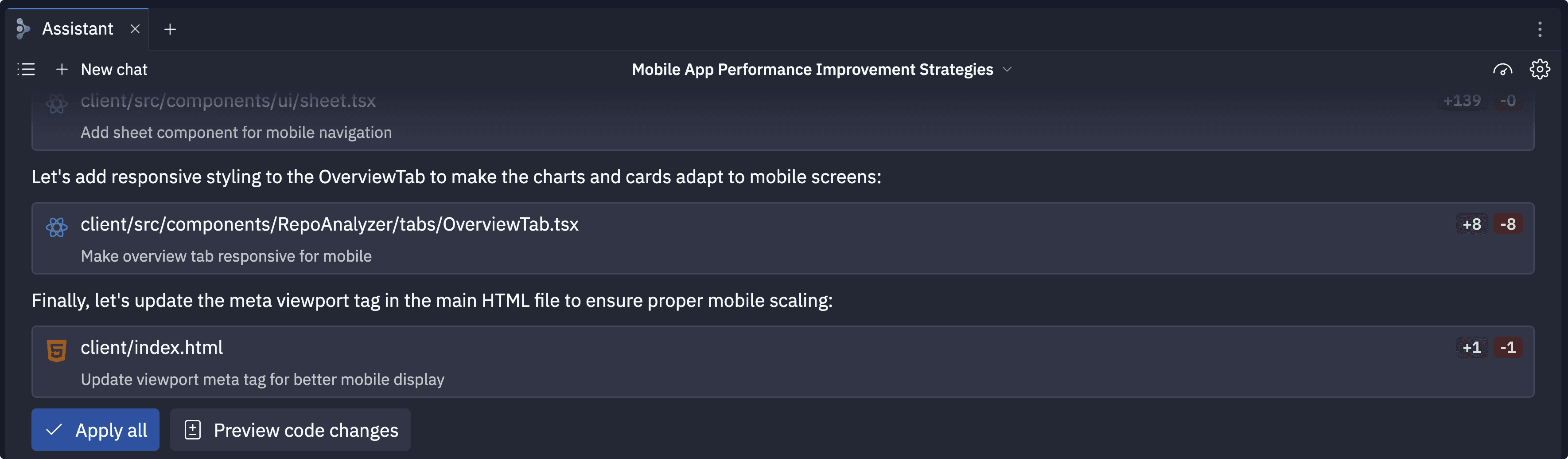

Iterative Prompting & Feedback

Interfaces where users refine generated outputs over time—with branching, feedback, or manual edits.

Examples:

Highlighting a paragraph and asking, “Change the tone…”

Giving feedback like “More playful” or “Add stats” to a generated email

Comparing different versions of a product name and merging favorites

Tools: Figma AI (copy plugins), Canva, Framer AI, Copilot Chat, Midjourney Remix

Interaction Patterns:

Highlight-and-refine tools

Smart merging of user edits and AI generations

Version history with a visual diff

Undo/redo across versions

Inline feedback fields (e.g., “make this shorter”)
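Version history, branching, and undo/redo are easier to design once every generation is treated as a node that remembers its parent and the feedback that produced it. The sketch below assumes that model. `VersionHistory` and its methods are hypothetical names for illustration, not an API from any tool listed above.

```typescript
interface Version {
  id: number;
  parentId: number | null;
  instruction: string; // the prompt or feedback that produced this version
  content: string;
}

class VersionHistory {
  private versions: Version[] = [];

  // Adds a version under `parentId`. Committing twice under the same parent creates a branch.
  commit(parentId: number | null, instruction: string, content: string): Version {
    if (parentId !== null && !this.versions[parentId]) {
      throw new Error(`Unknown parent version ${parentId}`);
    }
    const version: Version = { id: this.versions.length, parentId, instruction, content };
    this.versions.push(version);
    return version;
  }

  // The chain of versions from the first prompt down to `id`.
  lineage(id: number): Version[] {
    const chain: Version[] = [];
    let current: Version | undefined = this.versions[id];
    while (current) {
      chain.unshift(current);
      current = current.parentId === null ? undefined : this.versions[current.parentId];
    }
    return chain;
  }

  // Alternative versions that were generated from the same starting point.
  children(id: number): Version[] {
    return this.versions.filter((v) => v.parentId === id);
  }

  // Undo steps back to the parent. Nothing is deleted, so redo stays possible.
  undo(id: number): Version | undefined {
    const parentId = this.versions[id]?.parentId;
    return parentId === null || parentId === undefined ? undefined : this.versions[parentId];
  }
}
```

For example, committing "More playful" and "Add stats" under the same first draft gives two sibling branches. Because each version stores its instruction, `lineage` can show the user how they got to the current result, which helps with keeping intent across many small refinements.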

😅 Challenges:

Tracking small refinements without losing intent

Handling branching versions and edits

Encouraging exploration without wasting tokens/credits

Helping users understand what can be edited

Preventing version fatigue or loops/hallucinations

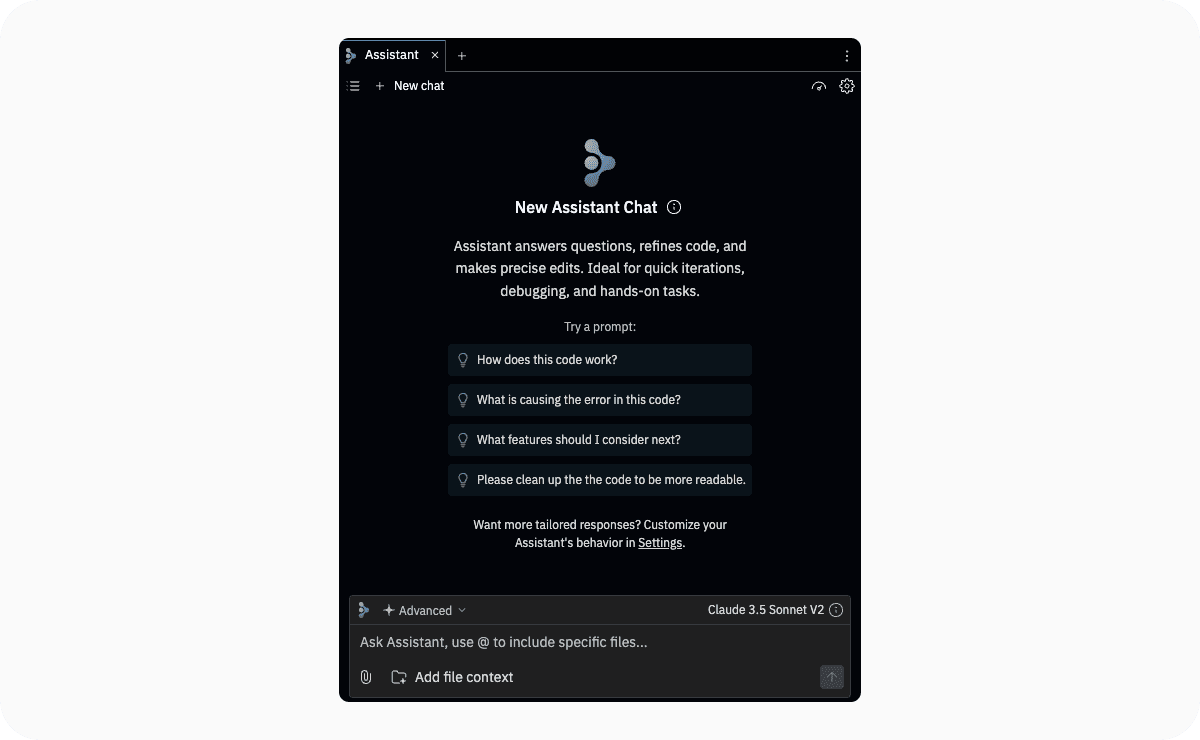

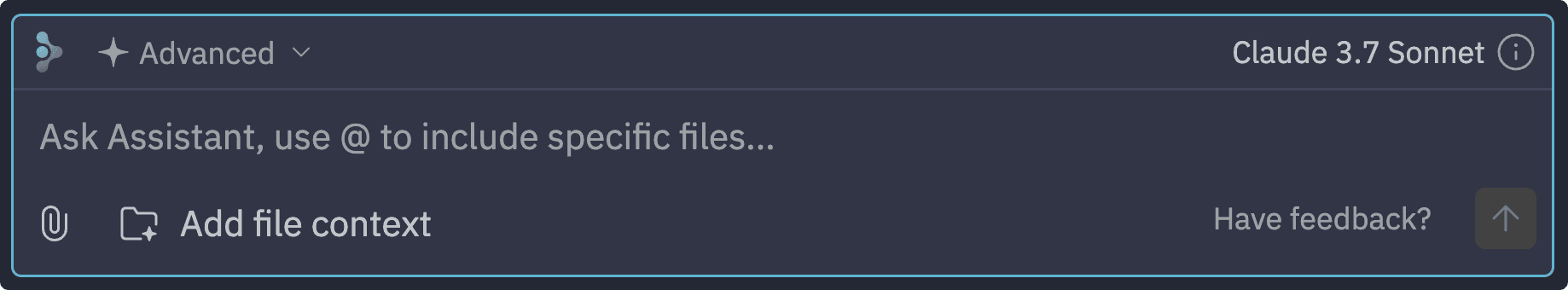

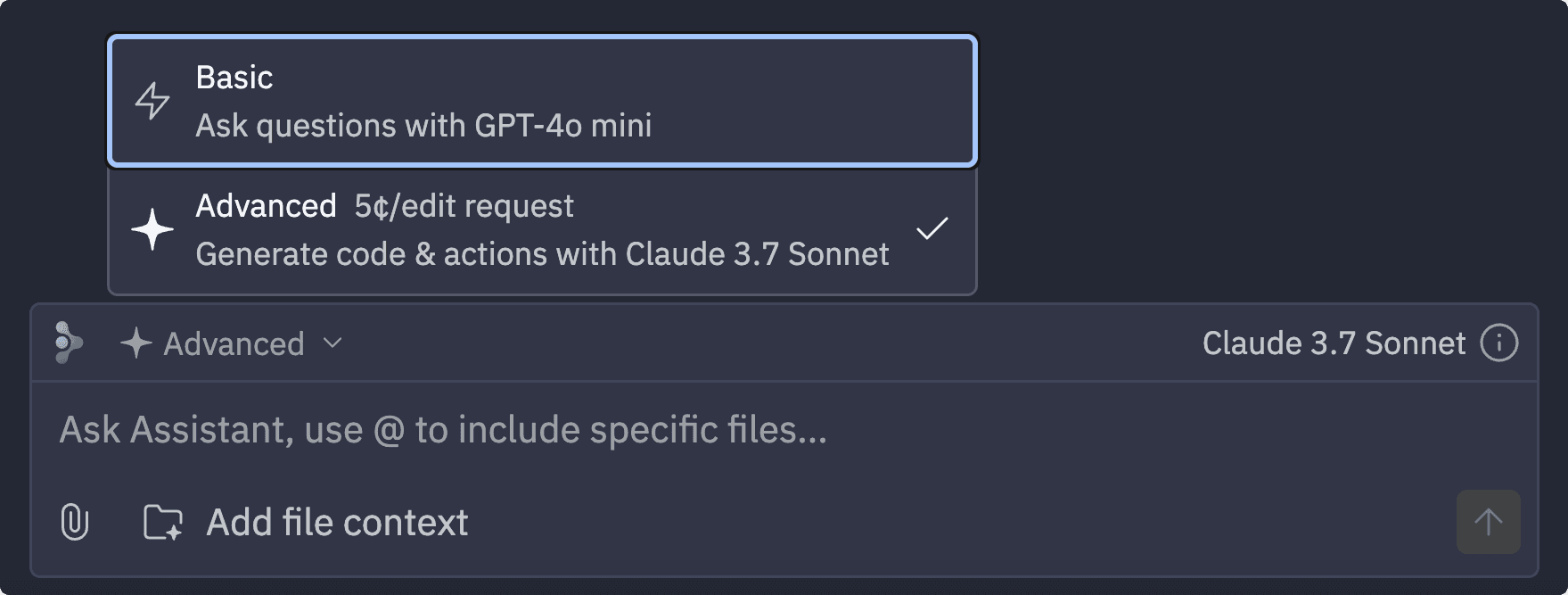

GitHub Copilot

Context-Aware Interfaces

Systems that adapt interface behavior based on user actions, context, and patterns.

Examples:

A writing tool suggesting citations when it sees “According to...”

An IDE surfacing security suggestions when writing login flows

An email tool nudging you to attach a file after writing “see attached.”

Tools: GitHub Copilot, GrammarlyGO, Notion AI, Superhuman, Zuni

Interaction Patterns:

Dynamic layouts

Context-sensitive help and guidance

Override options to take manual control

Interfaces that improve with repeated use
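A context-aware rule can be very small. Taking the “see attached” email example from above, here is a minimal sketch that also respects the override pattern: if the user has turned the reminder off, the rule never fires. The names (`DraftEmail`, `NudgeSettings`, `shouldNudge`) and the single-phrase check are assumptions for illustration; a real product would likely detect more phrasings.

```typescript
interface DraftEmail {
  body: string;
  attachmentCount: number;
}

interface NudgeSettings {
  attachmentReminder: boolean; // false once the user turns the nudge off
}

// Deliberately narrow: only the phrase from the example above.
const MENTIONS_ATTACHMENT = /\bsee attached\b/i;

function shouldNudge(draft: DraftEmail, settings: NudgeSettings): boolean {
  if (!settings.attachmentReminder) return false; // manual override always wins
  if (draft.attachmentCount > 0) return false; // nothing is missing
  return MENTIONS_ATTACHMENT.test(draft.body);
}
```

Keeping the rule this explicit also helps with predictability: it is easy to document in the design system when the nudge appears and how to switch it off.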

😅 Challenges:

Balancing predictability with intelligent adaptability

Managing cognitive load when interfaces shift (when to hide toolbars, etc.)

Testing the UX when the layout changes based on multiple factors

Respecting accessibility needs

AI Assistants

Personalized, intelligent guides embedded in your product.

Examples:

Onboarding tutorials that evolve based on user behavior

Suggesting next steps after analyzing what the user is doing (e.g., “Want to create a chart from this data?”)

Giving live performance tips during design or writing tasks

Tools: Notion AI, GrammarlyGO, Microsoft Copilot, Framer AI onboarding flows

Interaction Patterns:

Contextual suggestions triggered by user behavior

Progressive disclosure of advanced features

Multi-modal delivery: text, tooltip, chat, walkthrough

Learning from user preferences over time

😅 Challenges:

Helping without annoying

Supporting voice, chat, visual, and written instructions

Building credibility: when to speak up vs. stay silent

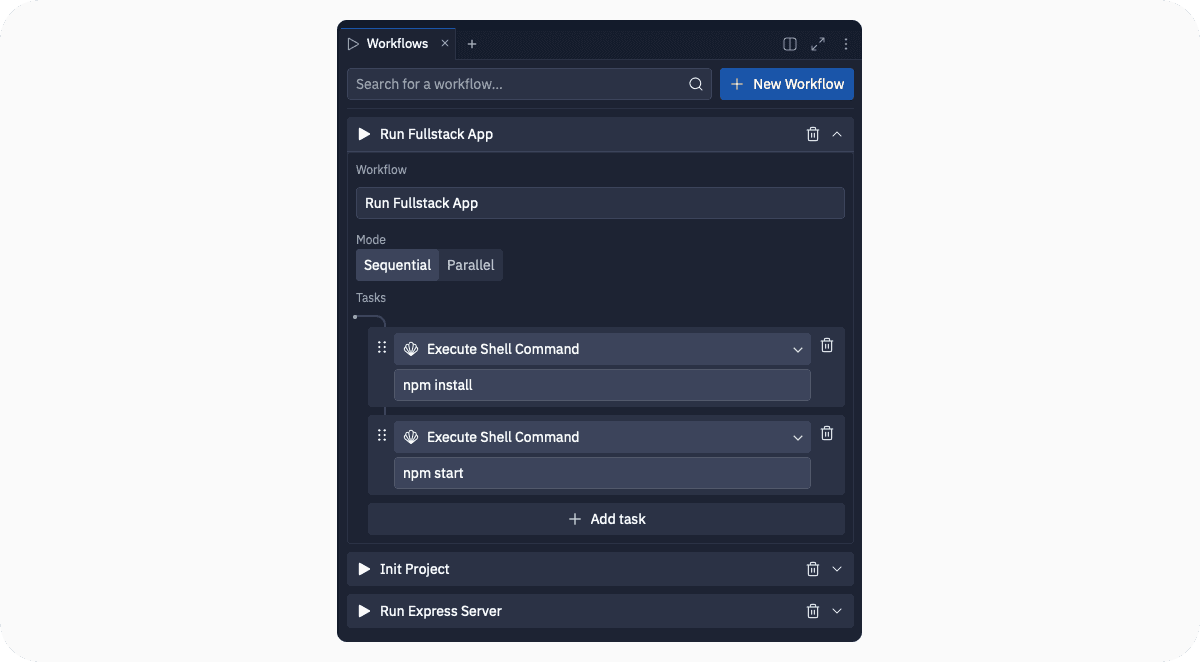

Replit

Gumloop

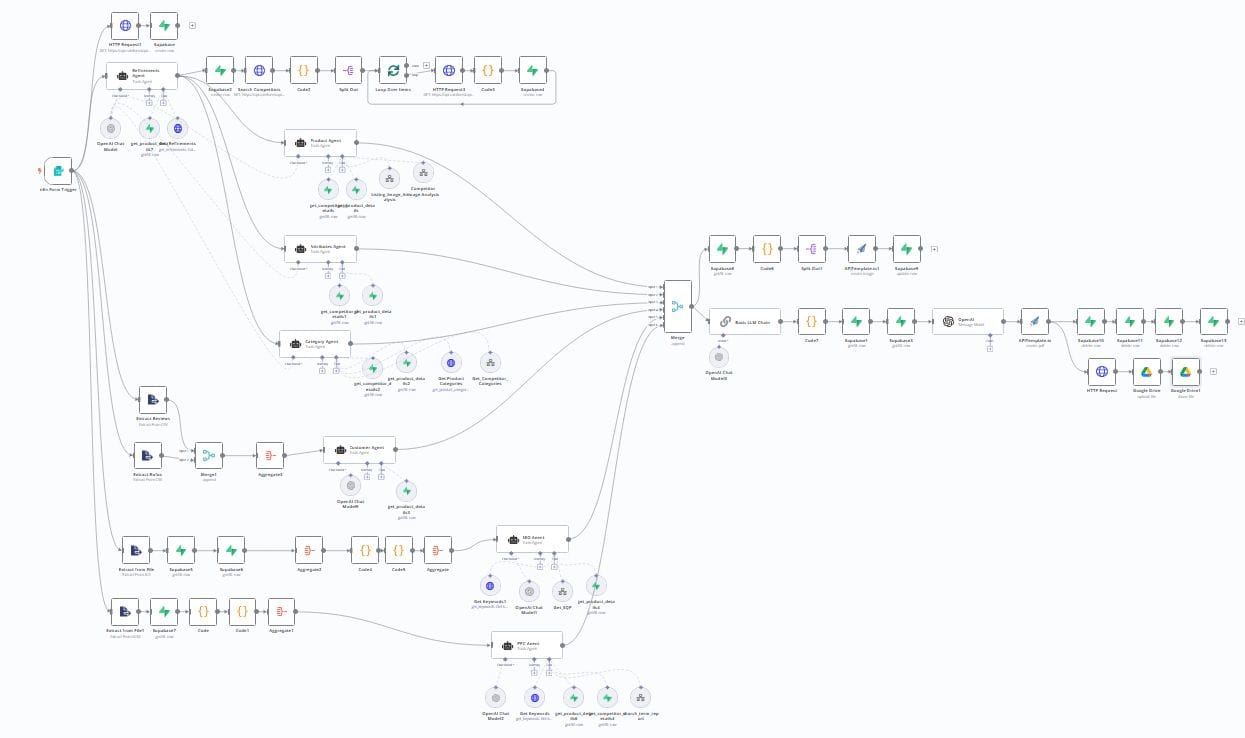

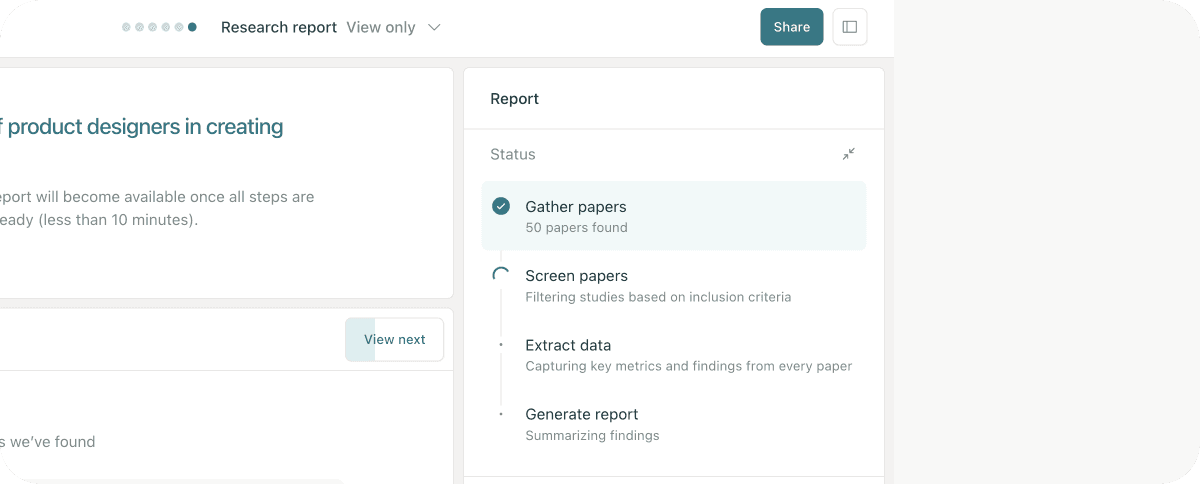

Multi-Agent Workflows

Systems that coordinate multiple AI agents to complete tasks together.

Examples:

Generating a report: one agent researches, another summarizes, a third formats the doc

Chained bots: detect mood → adjust copy tone → regenerate visual

Agents that critique or verify each other before showing the result

Tools: LangChain, AutoGen, Make.com, CrewAI, Zapier

Interaction Patterns:

Modular agents with defined roles and connectors

Branching logic for decisions

Logs, previews, and agent-specific debugging

Shared workflows editable by multiple users
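"Modular agents with defined roles" and "agent-specific debugging" fit together naturally if every handoff is recorded. Below is a minimal sketch of the report example (research → summarize → format). The agents are plain stub functions, not real model calls, and all names are hypothetical.

```typescript
interface Agent {
  name: string;
  role: string; // shown in the UI to explain what this agent does
  run(input: string): string;
}

interface TraceEntry {
  agent: string;
  input: string;
  output: string;
}

// Runs agents in order, passing each output to the next agent,
// and records every handoff so it can be inspected later.
function runPipeline(agents: Agent[], input: string): { output: string; trace: TraceEntry[] } {
  const trace: TraceEntry[] = [];
  let current = input;
  for (const agent of agents) {
    const output = agent.run(current);
    trace.push({ agent: agent.name, input: current, output });
    current = output;
  }
  return { output: current, trace };
}

// Stub agents standing in for real model calls.
const researcher: Agent = { name: "researcher", role: "Collects source material", run: (topic) => `notes on ${topic}` };
const summarizer: Agent = { name: "summarizer", role: "Condenses the research", run: (notes) => `Summary of ${notes}` };
const formatter: Agent = { name: "formatter", role: "Formats the document", run: (summary) => `# ${summary}` };

const report = runPipeline([researcher, summarizer, formatter], "Q3 sales");
```

The `trace` array is what a log or preview panel would render: one row per agent, with what it received and what it returned. The `role` field gives the interface something to display when explaining what each agent does and why.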

😅 Challenges:

Explaining what each agent does and why

Debugging workflows when multiple agents are involved

Managing cost and latency

Preventing circular logic or agent conflict

Showing history and traceability clearly (+rollbacks)

Make.com

Source: n8n

Replit

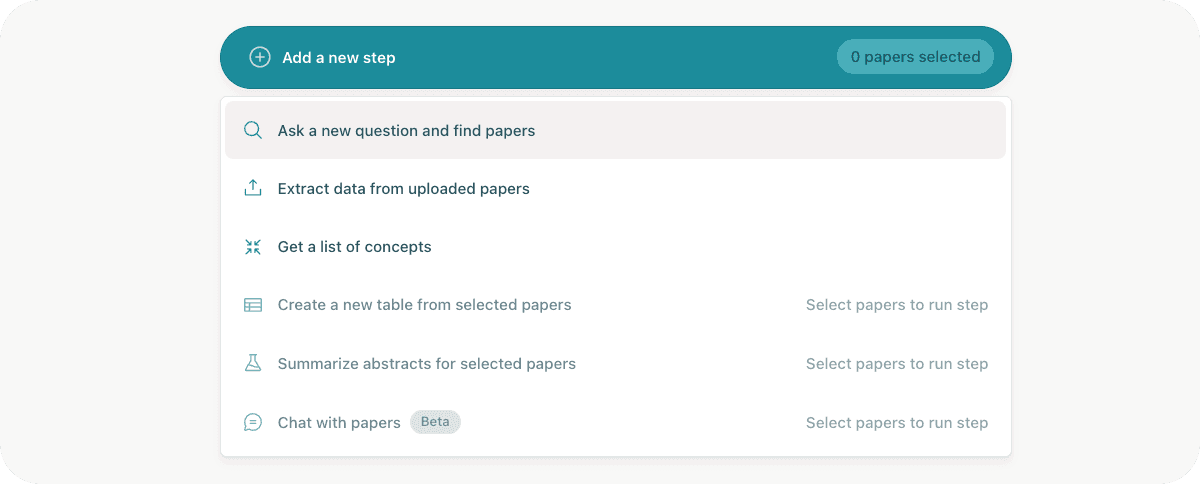

Defining steps

Showing next steps and confirming AI-suggested ones.

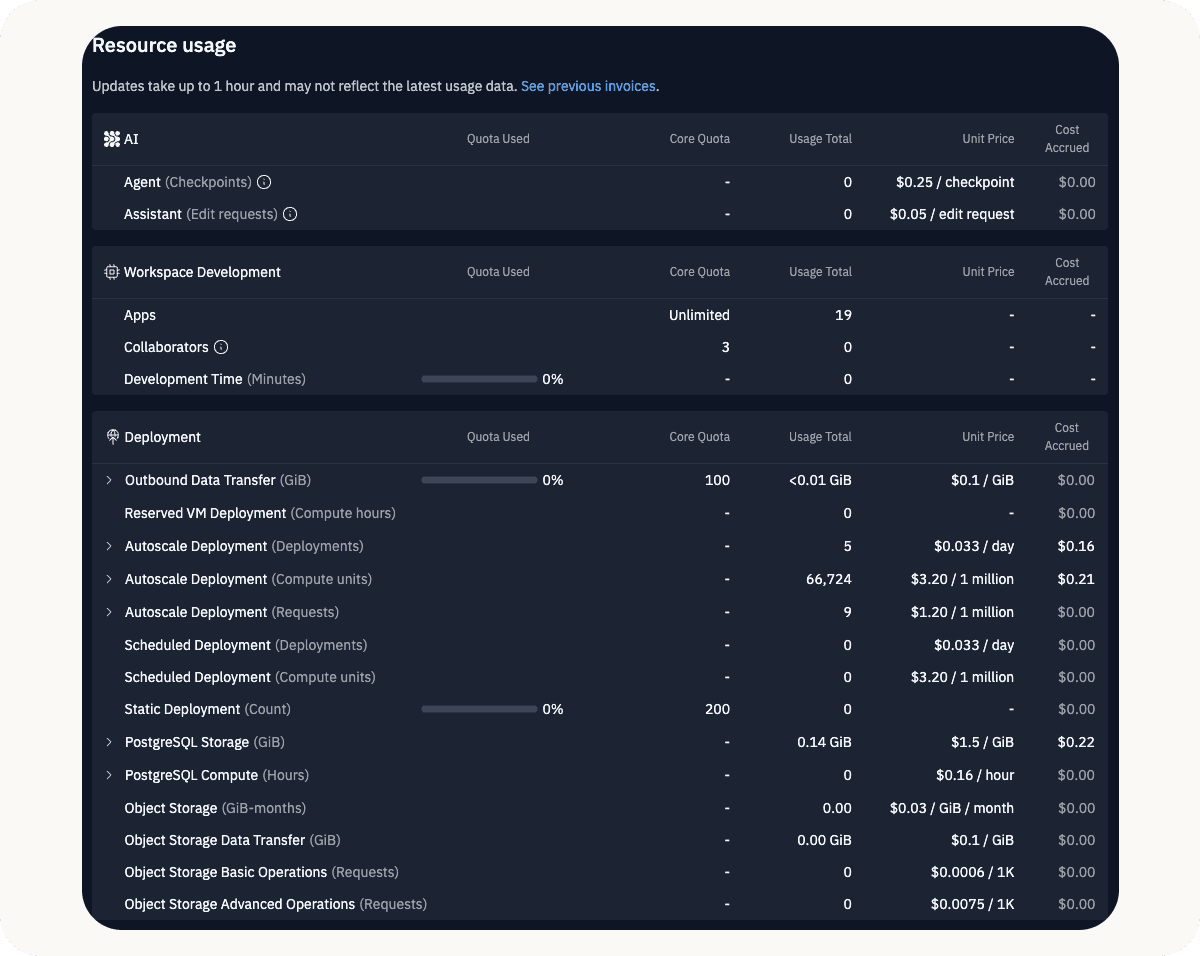

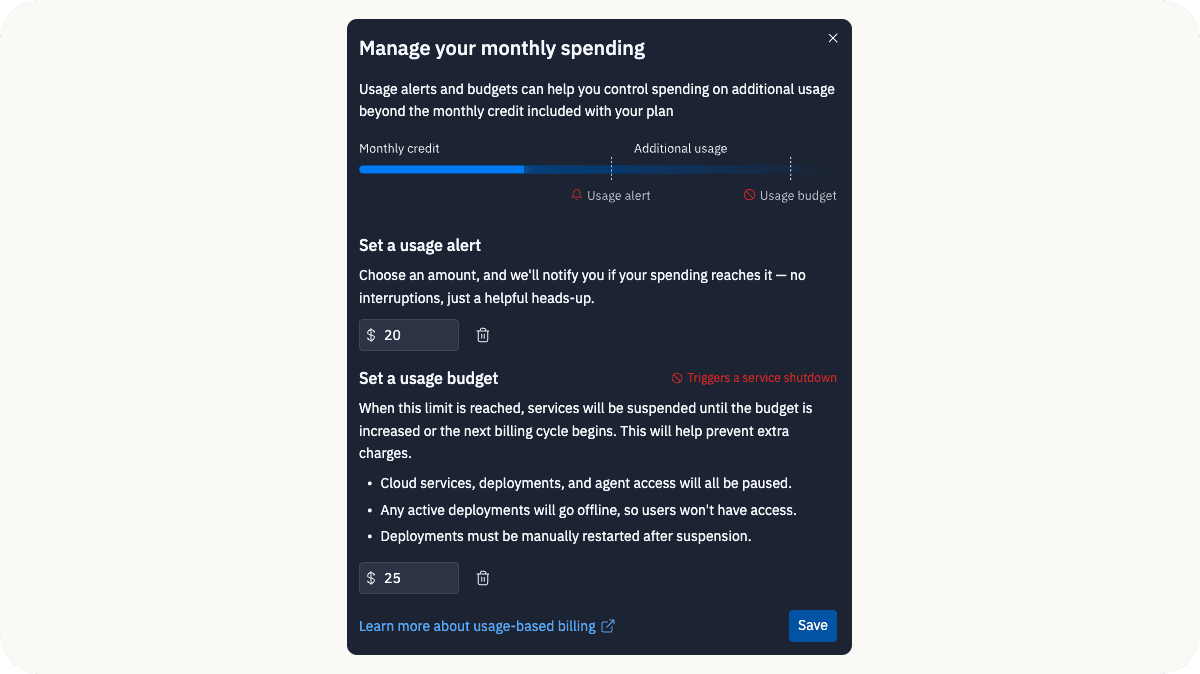

Managing credits

Showing usage and credit options is also part of building trust.

All in all, we need to start adding:

AI component libraries (prompt boxes, loading indicators, feedback controls)

AI-specific design tokens (with updated naming conventions)

Accessibility expectations for AI-generated interactions

Documentation for AI behaviors

Guidelines for context-aware components – how to prototype, test, and scale components that adapt to conversations and user behavior

Guidance on cognitive load for personalized/adaptive interfaces

Rules for what AI can create, change, and when attribution is needed

I'm Romina Kavcic. I coach design teams on implementing design systems, optimizing design processes, and developing design strategy.